Last Updated on February 2, 2024 by Rachel Hall

This subject is close to my heart, and this post might be a little less light-hearted than what you guys are used to seeing here. Be that as it may, I need to let this out.

It might seem counterintuitive, but the way that some people treat their potential or current sexual partners is atrocious. We all know someone whose partner cheated on them with their best friend/mother/favourite dog walker, and often, bad treatment goes beyond ‘shitty’ and straight to ‘actually abusive’. Here’s a fun thought exercise: think about the first time you were sexually harassed, and then try to recall the most recent time it happened.

My first time was before I started secondary school, and the most recent was a few weeks ago. A recent study in the UK found that 97% of women have been sexually harassed and 45% of women have been sexually assaulted or raped. A similar study of men found that 50% of them have had an ‘unwanted sexual experience’.

Here’s the thing: if you love someone, you don’t do that to them.

If someone loves you, they won’t do it to you. And people who harass and assault others should face consequences for their actions. We are long overdue for a reckoning on how criminal justice systems handle sexual offenders. In the USA, only 25 out of every 1,000 rapists are jailed, while in the UK the number of prosecutions for rape is falling.

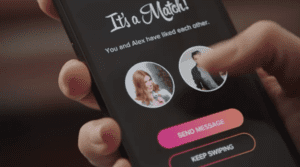

Now, most of us aren’t politicians, lawyers, or police officers. We can vote for politicians who prioritise justice for survivors of sexual violence and advocate for programmes that reduce sexual violence and harassment. But often the most practical thing we can do is make sure the people around us are educated on consent, and call them out when their behaviour becomes unacceptable. Tinder is trialling something similar with its new AI, which is designed to notice inappropriate messages and call out the people who send them.

When people think of sexual harassment, many of them imagine something where both parties are physically present, like catcalling or groping. Whilst these are both serious problems, so is online harassment. It’s incredibly depressing to realise that any method people use to communicate will inevitably be used to make someone feel uncomfortable.

Nowadays, with smartphones and the internet, harassing someone has never been easier.

Without these technologies, you could close your door and enjoy some peace, but with a phone by your side, anyone with an internet connection anywhere in the world can send you an unwanted and inappropriate message.

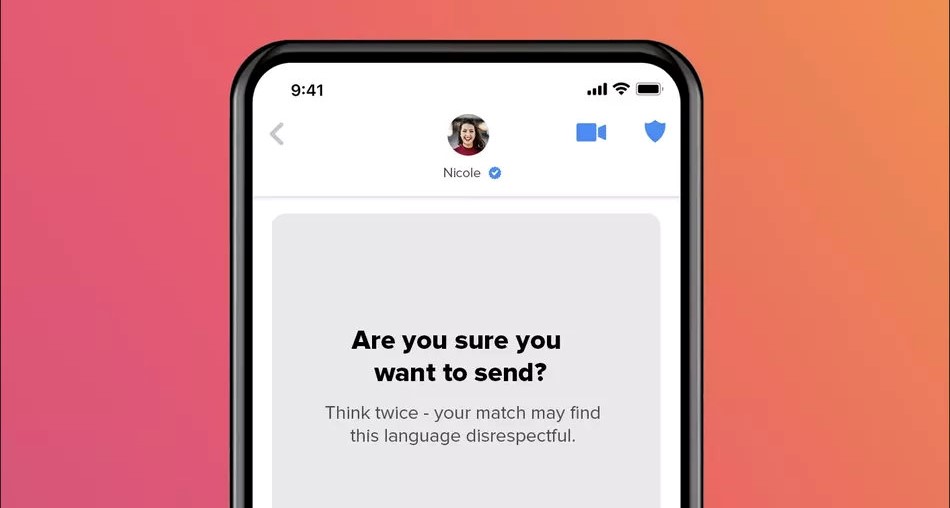

There are two parts to Tinder’s approach. The first targets the person sending the message. The AI is designed to notice certain words or phrases, and if the sender uses them, they get a nudge asking ‘Are you sure?’ Tinder refers to this as AYS.

The second part targets the recipient. If the sender proceeds and sends the message anyway, the person who receives it gets a nudge asking ‘Does this bother you?’ If they are upset, they can report the message to Tinder. Tinder will monitor trends to make sure that new words and phrases are added to the AI as they come into vogue.

So does it work?

It’s early days, but results from the trials seem positive. 10% of users who saw AYS nudges changed their minds, and over the following month they were less likely to be reported by other users, which suggests that reminders and accountability may change long-term behaviour. Reports of inappropriate messages also rose by 46% when the AI asked recipients ‘Does this bother you?’ after they received a message it considered inappropriate.

Again, this has only just been rolled out, so although initial results look promising, it will take a while to see whether Tinder sticks with it and whether it affects dating trends in the long term. It’ll also be really interesting to find out whether this affects face-to-face interactions: perhaps, after a while, someone who has regularly received AYS nudges might internalise those ideas and realise that yelling at someone from a car is just as bad as sending someone a creepy message.

Tinder might not overhaul the criminal justice system in every country it operates in, but the AYS system holds people accountable for their actions and gives anyone who receives an inappropriate message a way to protect themselves. There’s no victim-blaming or victim-shaming: the responsibility and consequences are placed on the perpetrator, which is crucial.

Ultimately, we’ll have to wait and see what happens, but the latest innovation from Tinder seems like a good idea. The results are promising and perhaps other dating apps will follow Tinder’s example and help keep their sites safer for everyone.

Rachel Hall, M.A., completed her education in English at the University of Pennsylvania and received her master’s degree in family therapy from Northern Washington University. She has been actively involved in the treatment of anxiety disorders, depression, OCD, and coping with life changes and traumatic events for both families and individual clients for over a decade. Her areas of expertise include narrative therapy, cognitive behavioral therapy, and therapy for traumatic cases. In addition, Rachel conducts workshops focusing on the psychology of positive thinking and coping skills for both parents and teens. She has also authored numerous articles on the topics of mental health, stress, family dynamics and parenting.
